Timings and parallelization

This section summarizes the options DFTK offers to monitor and influence performance of the code.

Timing measurements

By default DFTK uses TimerOutputs.jl to record timings, memory allocations and the number of calls for selected routines inside the code. These numbers are accessible in the object DFTK.timer. Since the timings are automatically accumulated inside this data structure, any timing measurement should first reset this timer before running the calculation of interest.

For example, to measure the timing of an SCF:

DFTK.reset_timer!(DFTK.timer)

scfres = self_consistent_field(basis, tol=1e-5)

DFTK.timer

────────────────────────────────────────────────────────────────────────────────
                                       Time                   Allocations
                              ───────────────────────   ────────────────────────
      Tot / % measured:            328ms / 59.1%            82.6MiB / 76.7%

Section               ncalls     time    %tot     avg     alloc    %tot      avg
────────────────────────────────────────────────────────────────────────────────
self_consistent_field      1    194ms  100.0%   194ms   63.3MiB  100.0%  63.3MiB
  LOBPCG                  27   93.8ms   48.4%  3.47ms   20.4MiB   32.2%   775KiB
    DftHamiltonian...     74   62.9ms   32.4%   850μs   6.53MiB   10.3%  90.4KiB
      local+kinetic      487   56.4ms   29.1%   116μs    274KiB    0.4%     576B
      nonlocal            74   1.15ms    0.6%  15.6μs   1.36MiB    2.1%  18.8KiB
    ortho! X vs Y         67   10.5ms    5.4%   157μs   3.25MiB    5.1%  49.6KiB
      ortho!             133   2.34ms    1.2%  17.6μs   1.03MiB    1.6%  7.94KiB
    preconditioning       74   7.98ms    4.1%   108μs   1.74MiB    2.8%  24.1KiB
    rayleigh_ritz         47   4.94ms    2.5%   105μs   1.73MiB    2.7%  37.6KiB
      ortho!              47    574μs    0.3%  12.2μs    258KiB    0.4%  5.50KiB
    Update residuals      74    803μs    0.4%  10.9μs   1.12MiB    1.8%  15.5KiB
    ortho!                27    602μs    0.3%  22.3μs    166KiB    0.3%  6.16KiB
  compute_density          9   63.3ms   32.6%  7.03ms   6.44MiB   10.2%   733KiB
    symmetrize_ρ           9   55.1ms   28.4%  6.12ms   5.01MiB    7.9%   570KiB
  energy_hamiltonian      10   16.0ms    8.3%  1.60ms   14.3MiB   22.5%  1.43MiB
    ene_ops               10   14.4ms    7.4%  1.44ms   10.5MiB   16.5%  1.05MiB
      ene_ops: xc         10   12.0ms    6.2%  1.20ms   4.70MiB    7.4%   481KiB
      ene_ops: har...     10   1.44ms    0.7%   144μs   4.51MiB    7.1%   462KiB
      ene_ops: non...     10    275μs    0.1%  27.5μs    146KiB    0.2%  14.6KiB
      ene_ops: kin...     10    237μs    0.1%  23.7μs   92.5KiB    0.1%  9.25KiB
      ene_ops: local      10    148μs    0.1%  14.8μs    938KiB    1.4%  93.8KiB
  energy                   9   13.5ms    7.0%  1.51ms   9.44MiB   14.9%  1.05MiB
    ene_ops: xc            9   11.2ms    5.8%  1.25ms   4.23MiB    6.7%   481KiB
    ene_ops: hartree       9   1.33ms    0.7%   148μs   4.06MiB    6.4%   462KiB
    ene_ops: nonlocal      9    354μs    0.2%  39.3μs    146KiB    0.2%  16.2KiB
    ene_ops: kinetic       9    271μs    0.1%  30.1μs   87.2KiB    0.1%  9.69KiB
    ene_ops: local         9    154μs    0.1%  17.2μs    845KiB    1.3%  93.8KiB
  ortho_qr                 3    129μs    0.1%  42.9μs    102KiB    0.2%  33.9KiB
  χ0Mixing                 9   78.3μs    0.0%  8.70μs   37.0KiB    0.1%  4.11KiB
enforce_real!              1   84.4μs    0.0%  84.4μs   1.69KiB    0.0%  1.69KiB
────────────────────────────────────────────────────────────────────────────────

The output produced when printing or displaying the DFTK.timer now shows a nice table summarising total time and allocations as well as a breakdown over individual routines.
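The accumulation behaviour described above can be reproduced outside of DFTK with TimerOutputs.jl directly. The following is a minimal sketch and not DFTK code: the timer `to`, the section label "work" and the function `do_work` are hypothetical names chosen for illustration, and the TimerOutputs package is assumed to be installed.

```julia
using TimerOutputs

# Hypothetical stand-alone timer playing the role of DFTK.timer.
const to = TimerOutput()

# Hypothetical workload; any function would do.
do_work() = sum(abs2, rand(1000))

# Three timed calls accumulate in the same section.
for _ in 1:3
    @timeit to "work" do_work()
end
ncalls_before = TimerOutputs.ncalls(to["work"])  # calls recorded so far

# Resetting discards everything recorded so far ...
reset_timer!(to)

# ... so that the next measurement only reflects the run of interest.
@timeit to "work" do_work()
ncalls_after = TimerOutputs.ncalls(to["work"])   # calls recorded since the reset

println("calls before reset: ", ncalls_before, ", after reset: ", ncalls_after)
```

Without the reset, the second measurement would be reported together with the three earlier calls, which is why a timing run in DFTK should start from a freshly reset timer.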

Timing measurements have the unfortunate disadvantage that they alter the way stack traces look, which sometimes makes it harder to find errors when debugging. For this reason timing measurements can be disabled completely (i.e. not even compiled into the code) by setting the package-level preference DFTK.set_timer_enabled!(false). You will need to restart your Julia session afterwards for this to take effect.

Rough timing estimates

A very (very) rough estimate of the time per SCF step (in seconds) can be obtained with the following function. The function assumes that FFTs are the limiting operation and that no parallelisation is employed.

function estimate_time_per_scf_step(basis::PlaneWaveBasis)
    # Super rough figure from various tests on cluster, laptops, ... on a 128^3 FFT grid.
    time_per_FFT_per_grid_point = 30 #= ms =# / 1000 / 128^3

    (time_per_FFT_per_grid_point
     * prod(basis.fft_size)
     * length(basis.kpoints)
     * div(basis.model.n_electrons, DFTK.filled_occupation(basis.model), RoundUp)
     * 8  # mean number of FFT steps per state per k-point per iteration
     )
end
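To see how the individual factors enter, the same arithmetic can be written out without a `PlaneWaveBasis`. This is a sketch under assumed inputs: the helper `estimate_from_numbers` is hypothetical and not part of DFTK, and the example values (an 18×18×18 FFT grid, 3 $k$-points, 8 electrons with two electrons per band) are chosen purely for illustration.

```julia
# Stand-alone version of the estimate taking plain numbers
# (hypothetical helper, not part of DFTK).
function estimate_from_numbers(fft_size, n_kpoints, n_electrons, filled_occupation)
    # Same rough figure as in the function above: 30 ms per FFT on a 128^3 grid.
    time_per_FFT_per_grid_point = 30 / 1000 / 128^3
    n_bands = cld(n_electrons, filled_occupation)  # occupied bands, rounded up
    n_fft_per_state = 8  # mean number of FFTs per state per k-point per iteration
    time_per_FFT_per_grid_point * prod(fft_size) * n_kpoints * n_bands * n_fft_per_state
end

# Assumed example: 18^3 grid, 3 k-points, 8 electrons, 2 electrons per band.
t = estimate_from_numbers((18, 18, 18), 3, 8, 2)
println("Time per SCF step (s): ", round(t; sigdigits=3))
```

With these inputs the estimate is roughly 8 ms per SCF step. Doubling the number of $k$-points or bands doubles the estimate, while doubling the grid size in each direction increases it eightfold.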

"Time per SCF (s): $(estimate_time_per_scf_step(basis))"

"Time per SCF (s): 0.008009033203124998"

Options for parallelization

At the moment DFTK offers two ways to parallelize a calculation, firstly shared-memory parallelism using threading and secondly multiprocessing using MPI (via the MPI.jl Julia interface). MPI-based parallelism is currently only over $k$-points, such that it cannot be used for calculations with only a single $k$-point. Otherwise combining both forms of parallelism is possible as well.

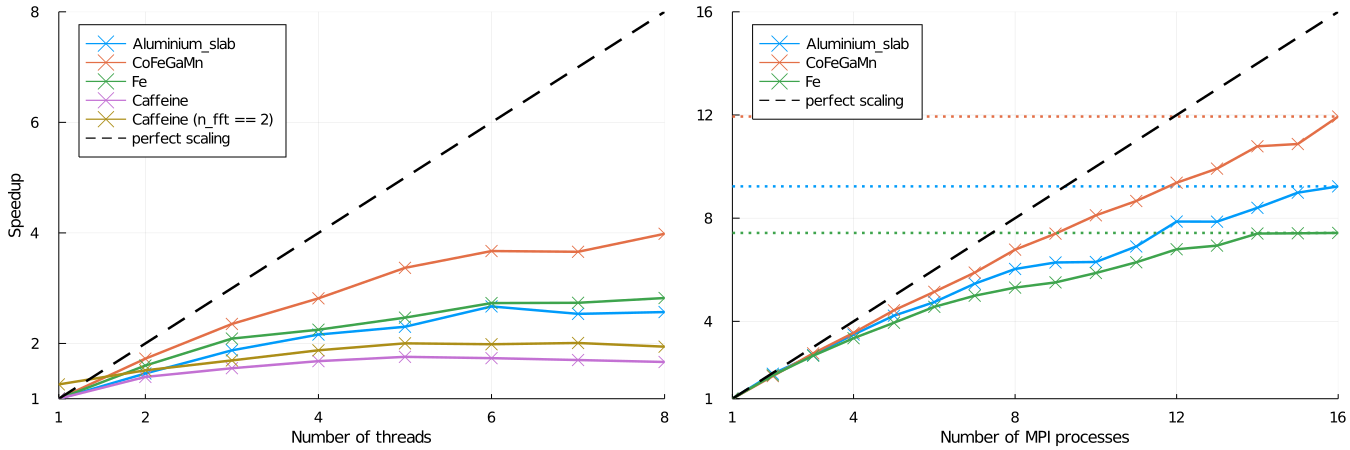

The scaling of both forms of parallelism for a number of test cases is demonstrated in the following figure. These values were obtained using DFTK version 0.1.17 and Julia 1.6 and the precise scalings will likely be different depending on architecture, DFTK or Julia version. The rough trends should, however, be similar.

The MPI-based parallelization strategy clearly shows a superior scaling and should be preferred if available.

MPI-based parallelism

Currently DFTK uses MPI to distribute work over $k$-points only. This implies that calculations with only a single $k$-point cannot make use of it. For details on setting up and configuring MPI with Julia see the MPI.jl documentation.
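To illustrate why the number of $k$-points limits the useful number of MPI processes, the following sketch splits a list of $k$-point indices into contiguous blocks, one per process. This is a simplified model in plain Julia, not DFTK's actual distribution code; the function name `split_kpoints` is hypothetical.

```julia
# Hypothetical helper: assign the k-point indices 1:n_kpoints to n_procs
# processes in contiguous, nearly equal blocks (not DFTK's implementation).
function split_kpoints(n_kpoints::Integer, n_procs::Integer)
    base, extra = divrem(n_kpoints, n_procs)
    blocks = Vector{UnitRange{Int}}(undef, n_procs)
    start = 1
    for rank in 1:n_procs
        # The first `extra` processes take one additional k-point.
        len = base + (rank <= extra ? 1 : 0)
        blocks[rank] = start:(start + len - 1)
        start += len
    end
    blocks
end

# 10 k-points on 4 processes: every process has work to do.
println(split_kpoints(10, 4))

# A single k-point on 4 processes: three processes receive an empty range.
println(split_kpoints(1, 4))
```

In this model any process beyond the number of $k$-points stays idle, so requesting more MPI processes than $k$-points brings no benefit.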

First disable all threading inside DFTK by adding the following to the script running the DFTK calculation:

using DFTK
disable_threading()

Then run Julia in parallel using the mpiexecjl wrapper script from MPI.jl:

mpiexecjl -np 16 julia -t 1 myscript.jl

Here -np 16 tells MPI to use 16 processes and -t 1 tells Julia to use one thread only. Notice that we use mpiexecjl to automatically select the mpiexec compatible with the MPI version used by MPI.jl.

As usual with MPI, printing will be garbled. You can use

DFTK.mpi_master() || (redirect_stdout(); redirect_stderr())

at the top of your script to disable printing on all processes but one.

While standard procedures (such as the SCF or band structure calculations) fully support MPI, not all routines of DFTK are compatible with MPI yet and will throw an error when being called in an MPI-parallel run. In most cases there is no intrinsic limitation; it just has not yet been implemented. If you require MPI in one of our routines where this is not yet supported, feel free to open an issue on GitHub or otherwise get in touch.

Thread-based parallelism

Threading in DFTK currently happens on multiple layers, distributing the workload over different $k$-points, bands or within an FFT or BLAS call between threads. At its current stage our scaling for thread-based parallelism is worse than for MPI-based parallelism, and therefore the parallelism described here should only be used if no other option exists. To use thread-based parallelism proceed as follows:

Ensure that threading is properly set up inside DFTK by adding to the script running the DFTK calculation:

using DFTK
setup_threading()

This disables FFT threading and sets the number of BLAS threads to the number of Julia threads.

Run Julia passing the desired number of threads using the flag -t:

julia -t 8 myscript.jl

For some cases (e.g. a single $k$-point, few bands and a large FFT grid) it can be advantageous to add threading inside the FFTs as well. One example is the Caffeine calculation in the above scaling plot. In order to do so just call setup_threading(n_fft=2), which will select two FFT threads. More than two FFT threads are rarely useful.

Advanced threading tweaks

The default threading setup done by setup_threading is to select one FFT thread, and use Threads.nthreads() to determine the number of DFTK and BLAS threads. This section provides some info in case you want to change these defaults.

BLAS threads

All BLAS calls in Julia go through a parallelized OpenBLAS or MKL (with MKL.jl). Generally threading in BLAS calls is far from optimal and the default settings can be pretty bad. For example, for CPUs with hyper-threading enabled, the default number of threads seems to equal the number of virtual cores. Still, BLAS calls typically take second place in terms of the share of runtime they make up (between 10% and 20%). Of note, many of these do not take place on matrices of the size of the full FFT grid, but rather only in a subspace (e.g. orthogonalization, Rayleigh-Ritz, ...), such that parallelization is either disabled by the BLAS library anyway or not very effective. To set the number of BLAS threads use

using LinearAlgebra
BLAS.set_num_threads(N)

where N is the number of threads you desire. To check the number of BLAS threads currently used, you can use BLAS.get_num_threads().
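As a small illustration of these calls, the following sketch matches the BLAS thread count to the number of Julia threads, which is the default behaviour attributed to setup_threading above. It only uses the LinearAlgebra standard library and is not taken from DFTK itself; the variable names are arbitrary.

```julia
using LinearAlgebra

# Number of Julia threads (set via `-t` or JULIA_NUM_THREADS).
n_julia = Threads.nthreads()

# Remember the current setting so it can be restored afterwards.
n_blas_before = BLAS.get_num_threads()

# Request as many BLAS threads as there are Julia threads.
BLAS.set_num_threads(n_julia)
println("Julia threads: ", n_julia, ", BLAS threads: ", BLAS.get_num_threads())

# Restore the previous setting.
BLAS.set_num_threads(n_blas_before)
```

Printing both numbers at the start of a script is a cheap way to confirm that the intended configuration is actually in effect.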

DFTK threads

On top of BLAS threading DFTK uses Julia threads in a couple of places to parallelize over $k$-points (density computation) or bands (Hamiltonian application). The number of threads used for these aspects is controlled by default by Threads.nthreads(), which can be set using the flag -t passed to Julia or the environment variable JULIA_NUM_THREADS. Optionally, you can use setup_threading(; n_DFTK) to set an upper bound to the number of threads used by DFTK, regardless of Threads.nthreads() (for instance, if you want to thread several DFTK runs and don't want DFTK's threading to interfere with that). This is done through Preferences.jl and requires a restart of Julia.
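The kind of loop that benefits from Julia threads can be sketched in a few lines. The example below accumulates a weighted sum over mock $k$-point contributions using `Threads.@spawn`. It is a generic illustration under assumed data, not DFTK's density routine, and the names `accumulate_kpoints`, `weights` and `contributions` are hypothetical.

```julia
using Base.Threads: @spawn, nthreads

# Hypothetical stand-in for a density accumulation: sum_k w_k * ρ_k,
# where each ρ_k is a vector on a (mock) real-space grid.
# Assumes at least one k-point.
function accumulate_kpoints(weights, contributions; n_tasks=nthreads())
    n_k = length(weights)
    # Split the k-point indices into at most n_tasks contiguous chunks.
    chunks = Iterators.partition(1:n_k, cld(n_k, n_tasks))
    tasks = map(chunks) do chunk
        # Each task accumulates into its own buffer, avoiding data races.
        @spawn begin
            acc = zero(contributions[first(chunk)])
            for ik in chunk
                acc .+= weights[ik] .* contributions[ik]
            end
            acc
        end
    end
    # Combine the per-task partial results.
    sum(fetch.(tasks))
end

weights = fill(0.25, 4)                               # mock k-point weights
contributions = [fill(Float64(ik), 8) for ik in 1:4]  # mock per-k-point data
ρ = accumulate_kpoints(weights, contributions)
println(ρ[1])
```

The `n_tasks` keyword plays a role loosely analogous to an upper bound on the number of threads: passing a value smaller than `Threads.nthreads()` leaves the remaining threads free for other work.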

FFT threads

Since FFT threading is only used in DFTK inside the regions already parallelized by Julia threads, setting FFT threads to something larger than 1 is rarely useful if a sensible number of Julia threads has been chosen. Still, to explicitly set the FFT threads use

using FFTW
FFTW.set_num_threads(N)

where N is the number of threads you desire. By default FFT threading is disabled, which is almost always the best choice.